Thinking of Using GPT-3? Here Is a Crash Course

Generative Pre-trained Transformer 3, aka GPT-3, is the latest state-of-the-art NLP model offered by OpenAI. In this article, you will learn how to make the most of the model and implement it in your product or personal project.

What makes me a credible source?

- Machine learning practitioner for over 5 years.

- Have been using GPT 3 for over 1.5 years.

- Consulted for 4 startups on integrating GPT-3 into their products, and personally used it in a lot of internal tools.

- Trained a similar model (GPT-NeoX) from scratch.

This is going to be a long article 😅. If you are here, there is a good chance you already know a few of the things mentioned in this article. There is a button at the bottom right to jump to a particular section, so feel free to use it. Nonetheless, I am sure you will learn something that you didn’t know before.

So Let’s Get Started

What is GPT 3?

GPT-3 is the third generation of the Generative Pre-trained Transformer. This particular model is described as a "general intelligence" autoregressive language model. To use the model efficiently, we need to fully understand those terms, so here they are in non-jargon language. Do read this; it will come in extremely handy.

- Generative - As the name implies, generative models generate text from the input text provided. It’s like a friend who completes your sentences, except it’s not annoying 😅. The model analyses your input (aka the prompt) using what it learned from the text it was trained on, and predicts the continuation one word at a time (strictly speaking, one token at a time). After a word is predicted, the input plus the predicted word becomes the new input, and so on. It’s an oversimplification, but you get the gist.

- Pre-trained - Well 🤷. The model has already been trained on a large body of text, so you don’t have to train it yourself.

- Transformer - A transformer is a type of neural network (you know, the systems loosely inspired by the human brain). These models are becoming the new standard in NLP. A transformer uses a mechanism called attention to learn the context of, and the relationships between, the words in the text it is given.

- General Intelligence - Most conventional models are designed for a specific task, whereas a general-purpose model like this can do many things. The output of GPT-3 is always delivered in a generative form, but you can ask it to classify, fill in the blanks, etc.

- Autoregressive - Autoregressive models predict the next value from the values that came before it. An example could be a stock prediction model. GPT-3 does the same thing with text: it predicts the next word from the preceding ones, based on the text (mostly text) it was trained on, which is a lot.

- Language Model - A language model uses statistics and probability to estimate which word is likely to come next. This is how GPT-3 predicts its output.
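To make the "predict a word, append it, repeat" loop concrete, here is a minimal sketch in Python. It is not how GPT-3 is implemented: the `TOY_MODEL` table and its probabilities are invented for illustration, and a real model conditions on the whole preceding text rather than only the last word.

```python
import random

# Toy "language model": for each word, the words that may follow it and
# their probabilities. The numbers are made up for illustration only.
TOY_MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}


def generate(prompt, max_new_words=5, seed=0):
    """Autoregressive loop: predict one word, append it, repeat."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = TOY_MODEL.get(words[-1])
        if not candidates:  # no known continuation, so stop generating
            break
        next_word = rng.choices(
            list(candidates), weights=list(candidates.values())
        )[0]
        words.append(next_word)  # the output becomes part of the next input
    return " ".join(words)


print(generate("the"))
```

Each pass through the loop only looks at what has been produced so far, which is the "autoregressive" part; the weighted random choice is the "language model" part.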

Why Is GPT-3 a Game Changer?

GPT-3 contains 175 billion parameters and was trained on 45 TB of data. A single training run reportedly cost 12 million dollars, and hosting the model would probably cost tens of thousands of dollars a month. I cannot begin to comprehend the amount of time and money that went into the research.

So, why is GPT-3 a game changer?

You can access millions of dollars' worth of IP for a few cents. The launch of GPT-3 has spawned a lot of startups and opened up possibilities that were previously accessible only to large organizations. In my opinion, it’s the first mass-adoption commercial AI product.

Despite being in the AI game, I was blown away by its output. For short-form text generation and other intelligence problems, it was indistinguishable from a human. If you are interested in knowing more about this piece of technology, I would highly recommend reading their paper.

Fun Fact: Did you know Elon Musk was one of the founders of OpenAI? He left later to avoid potential conflicts with Tesla.

Understanding Key Concepts For Use Of GPT 3

There are 4 things you need to understand about GPT-3 to use it with confidence:

- Prompt - The input you give your model.

- Token - The unit in which text is measured and billed. 1 token is approximately 4 characters or 0.75 words. For most models the limit is 2048 tokens, or about 1500 words.

- Model - The model you choose to process your prompt.

- Fine Tuning - Customizing your model to get better outputs.
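The token rule of thumb above is easy to turn into a quick budget check. This is only a rough estimate based on the "1 token is about 4 characters" figure, not the real tokenizer, and the helper names `estimate_tokens` and `fits_in_context` are made up for this sketch.

```python
import math

CHARS_PER_TOKEN = 4    # rule of thumb from the article, not an exact tokenizer
CONTEXT_LIMIT = 2048   # token limit quoted above for most models


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(prompt: str, max_output_tokens: int,
                    limit: int = CONTEXT_LIMIT) -> bool:
    """Check that the prompt plus the requested output stays within the limit."""
    return estimate_tokens(prompt) + max_output_tokens <= limit


prompt = "Generate a summary for the following text: ..."
print(estimate_tokens(prompt), fits_in_context(prompt, max_output_tokens=500))
```

Because the estimate is approximate, it is worth leaving some headroom rather than planning to use the limit exactly.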

What Models Does GPT 3 Offer?

OpenAI offers a lot of products, and GPT-3 is just one of them. Two other notable ones are Codex, which powers GitHub Copilot, and DALL·E 2, which generates amazing images from text descriptions. GPT-3 itself comes in 4 models, namely Davinci, Curie, Babbage and Ada.

Which GPT 3 model to use and When?

To be honest there is no correct answer. Each model brings a different level of sophistication and finesse. And their pricing is drastically different. It usually depends on your use case. I’ll share my personal experience with an explanation but you should try them all to decide.

- Davinci - The most powerful offering and also the most expensive one at $0.0200/1K tokens. It’s also the slowest, and the only model which got a version 2 release. This model offers the highest level of comprehension.

Use Cases - Content creation tools and anything that requires an understanding of long-form content. Rule of thumb: if the task requires the mental capabilities of someone older than 13, go for it.

- Curie - Costing $0.0020/1K tokens, Curie is right there in the sweet spot. It’s not as capable as Davinci but it is faster, which makes it ideal for APIs and tools. You know, for things that require faster inference.

Use Cases - Complex NLP tasks. I have used it for grammar correction, Named Entity Recognition, topic extraction, etc. on large texts.

- Babbage - Costing $0.0005/1K tokens, Babbage is a good enough model for basic NLP tasks. I use it for working with smaller texts (a couple of lines). I personally never use it for generation tasks, only for classification and extraction. If you have an API that does basic NLP but doesn’t get used enough to justify the server cost, use Babbage.

Use Cases - Sentiment analysis, zero-shot topic classification, and very basic summarization (with fine tuning).

- Ada - It’s the fastest model offered and also the cheapest at $0.0004/1K tokens. As the price and performance difference from Babbage is small, most of the time I end up using Babbage. I used Ada once alongside web scraping.

Use Cases - Basic NLP at scale. I have used it for keyword extraction from webpages for a case study.
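Since the prices differ by up to 50x, it helps to estimate the bill before picking a model. The sketch below uses the per-1K-token prices quoted above (they may have changed since); the function name and the example traffic numbers are assumptions for illustration.

```python
# Prices per 1K tokens as quoted in this article; check current pricing.
PRICE_PER_1K_TOKENS = {
    "davinci": 0.0200,
    "curie": 0.0020,
    "babbage": 0.0005,
    "ada": 0.0004,
}


def estimate_cost(model, prompt_tokens, completion_tokens, requests=1):
    """Estimated cost in dollars: both prompt and output tokens are billed."""
    total_tokens = (prompt_tokens + completion_tokens) * requests
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS[model]


# Hypothetical workload: 10,000 requests of 500 prompt + 250 output tokens.
for model in PRICE_PER_1K_TOKENS:
    cost = estimate_cost(model, 500, 250, requests=10_000)
    print(f"{model:8s} ${cost:.2f}")
```

Running the same workload through every model makes the trade-off visible: a task that Babbage handles well costs a small fraction of what it would on Davinci.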

What Are Different GPT-3 Configurations?

Regardless of what model you use or how you use it(via Playground or API). There are some standard configurations. It’s important to understand these as it affects the desired outcome.

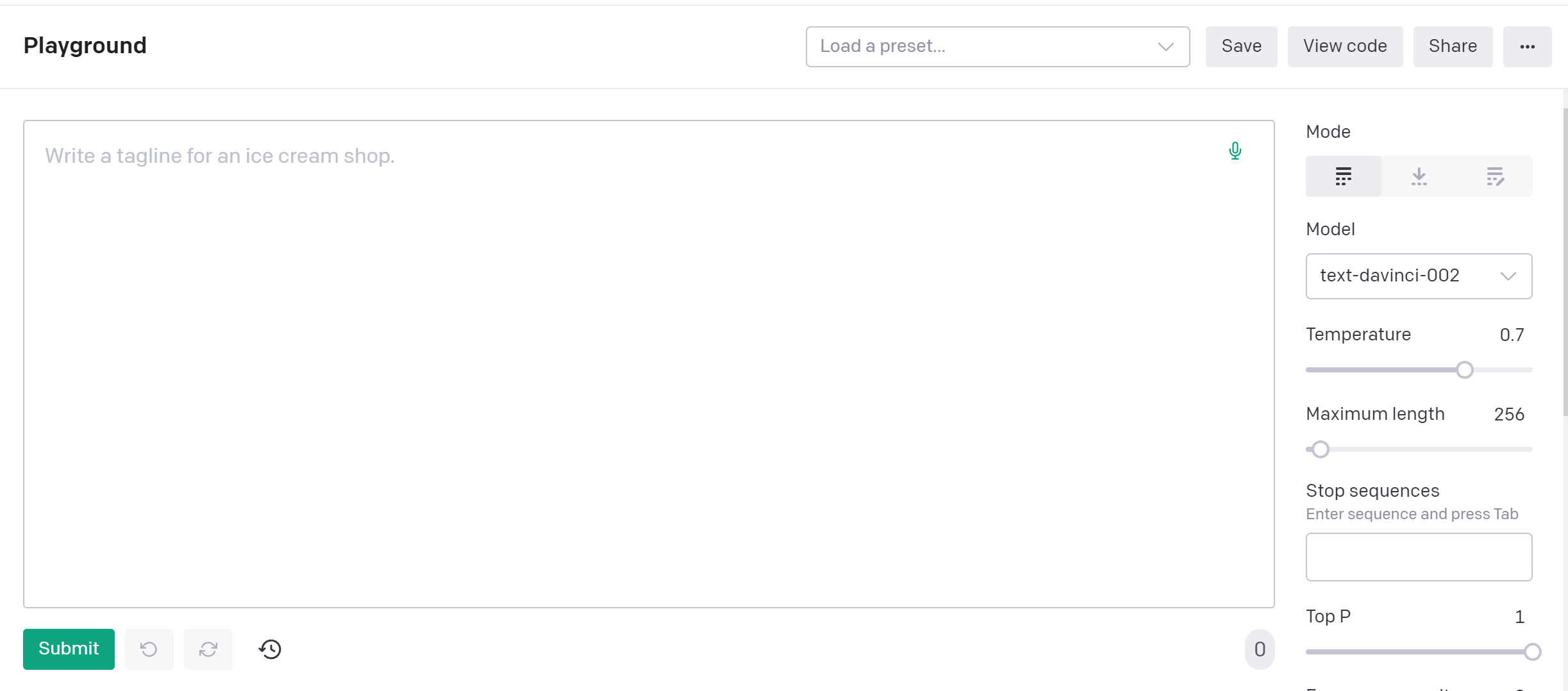

In case you haven’t seen the Playground this is how it looks 👇. The options on the right are the ones we are talking about.

- Mode - OpenAI offers 3 modes: Completion, Insert and Edit. Completion is the most prominent one; the other 2 are still in beta (at the time of this article). For most use cases Completion will do the job. It takes a prompt and predicts the text that follows, and it can be used for generation, classification, etc. Think of Insert as a fill-in-the-blanks kind of situation. I haven’t found a use case to test Insert and Edit thoroughly. Most of the things Edit does can be done with Completion.

- Model - Selects the model that processes your request. We already know about the models from earlier in the article.

- Temperature - A scale between 0-1 that determines the “randomness” (the term used by OpenAI) of the output. I think of it as a creativity scale: the higher the temperature, the more creative the output will be. Sometimes, if the prompt is not strong or descriptive enough, the models can output garbage at a higher temperature, whereas at lower temperatures the model tends to repeat itself. So experiment with it. A good range is 0.4-0.8 for most tasks, and 0.6-0.8 for text generation.

- Maximum Length - The maximum number of tokens the model may generate. It ranges from 1 to 4,000 and varies from model to model; Davinci supports the most tokens. The prompt and the output together have to fit within the model’s token limit, so it becomes very tricky to set this if the prompt is dynamic. It’s always a good idea to enforce an upper limit on your prompt in code. As the APIs are billed per token, the larger the prompt or output, the more you are billed.

- Stop Sequences - Stop sequences are text patterns at which the model stops generating tokens. What does that mean for us? This is not verified by OpenAI, but in my experience these stop sequences also act as a breaker in your prompt, and using them effectively can change the output drastically. E.g. let’s consider the stop sequence ########. You can break up a prompt like this:

Generate A Summary for the following Text

########

Text: <Some text to summarize>

########

Summary:

Do not take my word for it. Check out the prompts in the examples OpenAI has published.

- Top P - TL;DR: Leave it at 1. Technical stuff ahead ⚠️

Remember how I said temperature increases creativity? To pick the next word, the model samples from (think "considers") a set of candidate words, each with its own probability. Temperature reshapes those probabilities: a higher temperature evens them out, so less likely words get picked more often. Many models only consider the K most likely candidates (this is known as Top K). Top P (or nucleus sampling), used by GPT-3, instead considers the smallest set of candidates whose combined probability reaches the Top P value. So Top P combined with temperature controls the creativity of the output. Keeping it at the max (1) ensures that all candidates are considered.

- Frequency Penalty - It’s a value between 0-2. It tells GPT-3 to penalize words that have already appeared in the prompt or the prediction, and the more often a word has appeared, the bigger the penalty. So if you do not want the same phrases to appear, increase this value. GPT-3 models are notorious for repeating sentences. I generally keep this value between 0.5-1.5 for generation. The frequency penalty decreases the probability of phrase repetition; it’s probabilistic, not definitive. Keep it on the lower side for a summary, 0 for classification, and approx 1 for text generation.

- Presence Penalty - It’s very similar to the frequency penalty, but it applies as soon as a word has appeared at all, regardless of how many times. The higher the value, the more likely the output is to talk about new topics. You need to tweak both penalties to get the desired output. Keep it between 0-1 for summarization, classification and chatbots, and above 1 for generation tasks.

- Best Of - The number of completions the model generates behind the scenes before returning the best one. Each generation is chargeable, so if you set this value to 3 your API cost will be 3X. I generally tend not to use this option, but you might want to consider it if you are offering the output as a solution and not as a suggestion. The max I will go is 2; I didn’t see an improvement in output with a higher Best Of. I would recommend using it (if you want to) with the smaller models like Babbage and Ada.

- Inject Start Here - A text input that appends text to the end of your prompt. You could just as easily add that text to the prompt yourself. It’s somewhat helpful if you do the same task in the Playground repeatedly and save the preferences.

- Inject Restart Here - Also a text input; it appends the given text after the model’s output. Again, I barely use it.

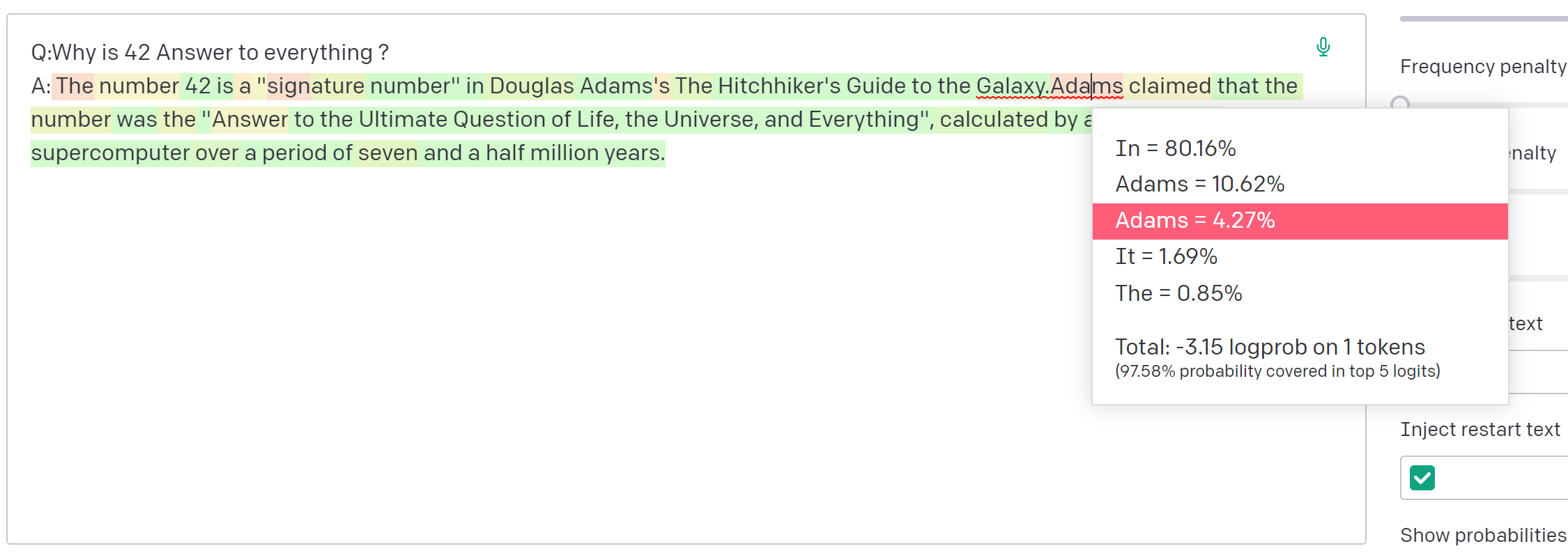

- Show Probabilities - This is an interesting one. I always choose the ” Full Spectrum \“ option. This is helpful if you want to debug the output. It color-coats the output with the probability of the word being generated. It also shows the alternative to that word. It’s super helpful when you are generating text or doing classification in the Playground. It looks something like this 👇
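To see how Temperature, Top P and the two penalties interact, here is a small self-contained sketch of one sampling step. It illustrates the general techniques (temperature-scaled softmax, nucleus sampling, count-based penalties), not OpenAI's actual implementation. The penalty formula follows the general form OpenAI describes, as far as I understand it, and all function names and scores are invented for the example.

```python
import math
import random


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the score of tokens that have already appeared."""
    adjusted = {}
    for token, logit in logits.items():
        seen = counts.get(token, 0)
        # Frequency penalty grows with every repeat; presence penalty is
        # applied once, as soon as the token has appeared at all.
        adjusted[token] = (logit - seen * frequency_penalty
                           - (presence_penalty if seen > 0 else 0.0))
    return adjusted


def softmax_with_temperature(logits, temperature=1.0):
    """Divide scores by the temperature, then normalise into probabilities."""
    temperature = max(temperature, 1e-6)  # avoid dividing by zero
    scaled = {t: l / temperature for t, l in logits.items()}
    peak = max(scaled.values())
    exps = {t: math.exp(s - peak) for t, s in scaled.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def top_p_filter(probs, top_p=1.0):
    """Keep the smallest set of likeliest tokens whose total reaches top_p."""
    kept, cumulative = {}, 0.0
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {t: p / total for t, p in kept.items()}


def sample_next(logits, counts, temperature=0.7, top_p=1.0,
                frequency_penalty=0.0, presence_penalty=0.0, rng=None):
    """One sampling step: penalise, rescale, truncate, then draw a token."""
    rng = rng or random.Random()
    penalised = apply_penalties(logits, counts, frequency_penalty, presence_penalty)
    probs = top_p_filter(softmax_with_temperature(penalised, temperature), top_p)
    return rng.choices(list(probs), weights=list(probs.values()))[0]


scores = {"great": 2.0, "good": 1.0, "odd": 0.0}  # made-up model scores
print(sample_next(scores, counts={"great": 2}, frequency_penalty=1.0))
```

Lowering the temperature or Top P concentrates the choice on the likeliest token, while the penalties push the choice away from words already used. That is why the advice above is to tune Temperature and leave Top P at 1 instead of changing both at once.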

Using GPT-3 in Your Applications

Your application will interface with GPT-3 through an API. There is a REST API and a number of SDKs (all SDKs are wrappers around the REST API); my preferred one is Python. By default, each account has a limited usage quota (generally $120), and if you want to use the API in production you have to request production access. OpenAI cares a lot about how its models are being used, so there is a strict approval process that your application needs to pass in order to get production access.

I will be explaining everything here in Python, but replicating it in other languages is very easy. I have used GPT-3 from Node.js and Go, and I think there are SDKs for other languages as well, e.g. PHP, C#, etc.

Getting your API key

GPT-3 is officially out of beta, which means anyone can sign up for it without joining a waitlist. It used to be a whole thing earlier. I personally got my access by emailing the CTO, but now you can just grab a key by signing up with OpenAI.

Once you are in the dashboard, you can get your key by clicking on the top right corner, where your username/profile picture is, and then choosing View API Keys from the dropdown.

How to use GPT-3 with Python

Let’s start by installing the OpenAI SDK:

    pip install --upgrade openai

Once the SDK is installed, let’s create a small Python file for calling the API. I personally prefer a class-based approach. Let’s make a file named open_ai.py:

    import openai

    # Written against the pre-1.0 openai SDK; later versions changed this interface.
    MAX_TOKENS = 1000
    MODEL = "text-davinci-002"
    TEMPERATURE = 0.7
    # Add Key Here
    API_TOKEN = ""


    class OpenAi:
        def __init__(self, max_tokens=MAX_TOKENS, temperature=TEMPERATURE) -> None:
            openai.api_key = API_TOKEN
            self.model = MODEL
            self.max_tokens = max_tokens
            self.temperature = temperature

        def generate(self, text: str) -> str:
            # Each argument mirrors an option in the Playground.
            response = openai.Completion.create(
                engine=self.model,
                prompt=text,
                temperature=self.temperature,
                max_tokens=self.max_tokens,
                top_p=1,
                best_of=1,
                frequency_penalty=0,
                presence_penalty=0,
                stop=["#", "\"\"\""]
            )
            # Return the text of the first (and only) completion.
            return response["choices"][0]["text"].strip()

I prefer to keep this kind of file in a lib folder, but feel free to save it wherever you like. An important thing to remember is that each option in this API call mirrors an option in the Playground, so it’s highly recommended to play around there before settling on the options. These particular options were used for a generative problem.

Now you can start using it like this:

    from open_ai import OpenAi

    ai = OpenAi()
    prompt = "Generate an inspirational quote \nQuote:"
    quote = ai.generate(prompt)
    print(quote)

This was a small example that will help you get started. Have Fun and play around with your prompts 😊

How to get your GPT 3 application into production?

OpenAI is really diligent about how its models are used. By default, everyone's account is limited to a certain usage (generally $120), which is clearly not enough for production use. They have tons of guidelines and recommendations on how to get your application approved for production. Here are my top picks to keep in mind while submitting your application. Follow these and you should be good to go.

- Make sure your SAAS/API is online and open to the web with at least 10 users.

- State your API usage as precisely as possible.

- Open AI claims to have a 90% acceptance rate but generative text usage tends to have a higher rejection rate. Regardless make sure to state how the user will be interacting with your application and what steps you have taken to prevent malicious use.

- Be sure to mention that you are passing a user param or User Identity in your API calls.

- Make sure your prompts and usage are compliant with their content policy. To me, the content policy is a common-courtesy kind of situation. Just make sure users won’t be using your API for nasty purposes. If you get a warning from OpenAI, block that user.

- Always have some kind of rate limit on API usage.

Pro Tip: Even after your use case is approved, there is a chance you will get a rate limit message from OpenAI under their fair usage policy. So make sure to handle that case.
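One common way to handle a rate limit message is to retry with exponential backoff. The sketch below is generic: `RateLimitError` is a hypothetical stand-in for whatever error your SDK raises in that case, and `call_with_backoff` is a name made up for this example.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the rate-limit error raised by your SDK."""


def call_with_backoff(func, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call func(); on a rate-limit error wait longer each time, then retry."""
    for attempt in range(max_retries):
        try:
            return func()
        except RateLimitError:
            if attempt == max_retries - 1:
                raise  # out of retries, let the caller decide what to do
            # 1s, 2s, 4s, ... plus a little jitter so clients don't sync up
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

You would wrap the API call in a function and pass it in, for example `call_with_backoff(lambda: ai.generate(prompt))`, after mapping your SDK's rate-limit error to the exception caught here. The `sleep` parameter exists only so the waiting can be swapped out in tests.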

Fine Tuning A GPT 3 Model

GPT-3 models are already trained on a very large dataset. Prompts are how you customize their outputs (few-shot learning). But sometimes prompts are not enough to get the most out of the model. So OpenAI provides a customization API that accepts a set of prompts and ideal outputs to further customize the model. Think of them as permanent prompts.

When should you fine tune a GPT 3 model?

Fine tuning is highly recommended for production models which serve a specific purpose. For most businesses using GPT-3, their prompt is their intellectual property.

Did you know you can extract the prompt someone is using? It’s really not that hard.

Anyway, there are a lot of upsides to using fine tuning.

- Protecting your prompt

- Cheaper queries. As you require a shorter prompt to instruct the model.

- OpenAI claims fine-tuned models have faster inference. The performance gain wasn’t that significant for me.

- Higher quality results than prompt design

Preparing data for fine tuning

OpenAI expects the data to be in JSONL format (it’s like JSON, but each object is on its own line). Each line should be an object that contains a prompt and an ideal completion. You don’t need to worry too much about the formatting, as the data preparation tool in the OpenAI SDK takes care of it and accepts CSV, TSV, XLSX, and JSON too.

    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}

Tips and Guidelines for Fine tuning

- Prompt + Completion should be less than 2048 tokens(~1500 words).

- Break down your main use case into sub-cases, with each sub-case having > 100 examples.

- I cannot emphasize this enough: use a stop sequence at the end of each completion, e.g. \n\n###\n\n. Use the same stop sequence when making requests.

- Create these prompts and completions manually. Spend some time on it. It’s going to pay off in the long run. Quality > Quantity.
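If you would rather build the training file yourself, the tips above can be applied in a few lines of Python. This is a minimal sketch: the helper name `to_jsonl` and the sample data are made up, and the length check uses the rough 4-characters-per-token rule rather than the real tokenizer.

```python
import json

STOP_SEQUENCE = "\n\n###\n\n"  # the stop sequence suggested in the tips above
MAX_TOKENS = 2048              # prompt + completion limit from the tips above
CHARS_PER_TOKEN = 4            # rough rule of thumb, not the real tokenizer


def to_jsonl(examples):
    """Turn (prompt, completion) pairs into JSONL text, one object per line."""
    lines = []
    for prompt, completion in examples:
        record = {"prompt": prompt, "completion": completion + STOP_SEQUENCE}
        length = len(record["prompt"]) + len(record["completion"])
        if length / CHARS_PER_TOKEN >= MAX_TOKENS:
            raise ValueError(f"example too long: {prompt[:40]!r}")
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


examples = [
    ("Summarize: The cat sat on the mat all day.", "A cat sat on a mat."),
    ("Summarize: It rained heavily in the city.", "Heavy rain hit the city."),
]
print(to_jsonl(examples))
```

Write the returned text to a file (any name, say train.jsonl) and pass that file to the fine-tuning tools.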

How to fine tune GPT 3 using Python?

Python is my go-to choice when it comes to anything machine learning, so 🤷. OpenAI also has a solid SDK for Python.

Installing Open AI SDK

    pip install --upgrade openai

In your terminal, export your OpenAI key. If you don’t have a key yet, get your key here.

    export OPENAI_API_KEY="<OPENAI_API_KEY>"

Export your data file in any of the supported formats (I prefer JSON), then run it through the data preparation tool:

    openai tools fine_tunes.prepare_data -f <LOCAL_FILE_PATH>

You are now all set to fine-tune your model. Choose a base model according to your use case. When in doubt, use Davinci.

    openai api fine_tunes.create -t <TRAIN_FILE_ID_OR_PATH> -m <BASE_MODEL>

Fine tuning takes some time. You can check your progress with:

    openai api fine_tunes.get -i <YOUR_FINE_TUNE_JOB_ID>

That’s it, you are all set. Just pass your model identifier in the model param in your calls.

Common Use Cases For GPT 3

- Content Writing, SEO, and Text Spinner Tools

- Classification and Sentiment Analysis Tools

- NER(Named Entity Recognition), Address and Pattern detection in large texts

- Chatbots and Knowledge Base

- Translation tools

- Natural Language to Code, Database queries, etc.

- Summarization and Question Answering

These are just the tip of the iceberg. Use your imagination, the possibilities are limitless.

Alternatives To GPT 3

To be honest there are not a lot of alternatives to GPT 3 which offer a complete hosted solution and performance but here are some notable mentions which I have personally used(except the last 2).

- GPT-J - A 6 billion parameter model from EleutherAI, hosted on Hugging Face. An alternative to Curie or Ada in some cases like NLP, classification, etc.

- GPT-NeoX - A powerful 20 billion parameter model. It can get really expensive to fine tune, requiring 48 GB of VRAM. I have personally used, trained and fine tuned it, but I had access to a lot of powerful machines. If you are planning to use AI at scale, you can consider this.

- BigScience - Offered and built via Hugging Face, this massive model is probably the closest to GPT-3. It’s trained on a very large dataset and performs decently for generative problems.

- BERT - A relatively older model by Google, available via the Hugging Face inference API. I still use it for NLP tasks (clustering and topic detection), and it gets the job done.

- T5 - An offering from Google. This model architecture performs surprisingly well if you have the hardware to fine tune it. You can test it out via the Hugging Face inference API.

- NLLB - No Language Left Behind is a model offered by Meta. The model is open-sourced, but again the computational power it requires makes it infeasible for common users. There is no inference API yet, but it’s definitely something to keep an eye on.

- MUM - It’s a successor to BERT. The model is not publicly available yet, but I believe it will be open-sourced soon. Something noteworthy to keep in mind.

Conclusion

GPT 3 offers quality and accessible AI at scale. Hosted models like these have opened a lot of possibilities that were only accessible to large organizations before. I would highly recommend using AI with caution and with responsibility. It’s an amazing piece of technology that you should definitely check out.

If you are planning to use this in your SaaS, this article should have cleared up a lot of things. If you are still confused, feel free to Get In Touch.

Since you have read this far, here is a Golden Nugget for you:

Microsoft has a big stake in OpenAI, and it offers $1,000 in OpenAI credits if you apply to their Startup Programme.

Happy Building 😊